Kauai Labs performs ongoing research into cutting-edge electronics, sensing and software technologies, with a focus on making these technologies accessible to robotics students for use within autonomous robot navigation systems.

Our key technology focus: inexpensive electronics, sensors and advanced algorithms for precision “orientation”, “localization” (both relative and absolute) and “mapping” in both indoor and outdoor environments.

Specifically, Kauai Labs research efforts span the following technology areas:

- Optical Flow Sensors

- MEMS Inertial Navigation Sensors

- Optical Depth/Ranging Sensors

- Geo-positioning Sensors (notably, Indoor)

- Vehicle-to-Vehicle Communications (V2V, V2I)

- Robot Operating System (ROS)

Additionally, multi-sensor data filtering/fusion algorithms are a key research area, providing the techniques by which these individual sensing technologies can be combined into a robust, efficient solution.

Optical Flow Sensors

PixArt PAA5101 Optical Flow Sensor

The PixArt PAA5101, released in late 2018, represents a step forward in optical flow sensing. The PAA5101 features both an IR laser with a “speckle pattern” for illumination on smooth surfaces at speeds up to 4 feet/second and an IR LED for illumination on carpeted surfaces at speeds up to 8 feet/second. Originally designed for indoor vacuum-cleaning robots, this sensor is an excellent fit for indoor robot position tracking, including in FRC and FTC environments. Kauai Labs has revived the Optical Flow prototype board for 2018 (now at version 5), adding this new sensor along with a laser distance sensor and next-generation IMU technology.

DIYDrones Optical Flow 1.0 Sensor

Sensor Data Fusion/Filtering Algorithms

Kauai Labs is researching several sensor data filtering and fusion algorithms for use in future Nav and OptiNav products. These algorithms combine complementary sensor data to increase accuracy beyond that of any individual sensor, and to reduce the noise and error inherent in each sensor. While implementations of these algorithms are available from various sources, two open-source works of particular note are the Mobile Robot Programming Toolkit and the Direction Cosine Matrix project noted below.
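To make the idea of combining complementary sensor data concrete, here is a minimal Python sketch of inverse-variance weighting, the static special case that Kalman-style filters generalize: two independent readings of the same quantity are weighted by their confidence, and the fused estimate has a lower variance than either input. The function name and example values are hypothetical and not taken from any Kauai Labs product.

```python
def fuse_measurements(z1, var1, z2, var2):
    """Fuse two independent, unbiased measurements of the same quantity.

    Each reading is weighted by the inverse of its variance, so the more
    precise sensor contributes more. The fused variance is always smaller
    than either input variance.
    """
    if var1 <= 0 or var2 <= 0:
        raise ValueError("variances must be positive")
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical example: a noisy range reading (variance 4.0) and a more
# precise reading of the same distance (variance 1.0).
estimate, variance = fuse_measurements(10.0, 4.0, 12.0, 1.0)
print(f"fused estimate = {estimate:.2f}, variance = {variance:.2f}")
```

The fused value lands closer to the more precise reading, and its variance (0.8) is below both inputs.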

Extended Kalman Filter

The Extended Kalman Filter (EKF) has become the accepted basis for the majority of orientation filter algorithms and commercial inertial orientation sensors. Although the EKF is computationally intensive, one advantage of Kalman-based approaches is that they can estimate the gyroscope bias as an additional state within the system model.
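The bias-as-a-state idea can be seen in a reduced, single-axis form. The sketch below is a two-state linear Kalman filter (tilt angle and gyro bias) fed by a gyro rate and an accelerometer-derived angle; because this toy model is linear, the EKF's linearization step drops out. It is a simplified illustration under assumed noise values (`q_angle`, `q_bias` and `r_measure` are hypothetical tuning parameters), not the filter used in any Kauai Labs product.

```python
def kalman_tilt(gyro_rates, accel_angles, dt,
                q_angle=0.001, q_bias=0.003, r_measure=0.03):
    """Two-state linear Kalman filter with state [tilt angle, gyro bias].

    gyro_rates:   measured angular rates (rad/s), including an unknown bias
    accel_angles: tilt angles (rad) derived from the accelerometer
    Returns the final (angle, bias) estimate.
    """
    angle, bias = 0.0, 0.0
    # Error covariance P = [[p00, p01], [p10, p11]], initially uncertain.
    p00, p01, p10, p11 = 1.0, 0.0, 0.0, 1.0
    for rate, z in zip(gyro_rates, accel_angles):
        # Predict: integrate the bias-corrected gyro rate.
        angle += (rate - bias) * dt
        # P = F P F^T + Q with F = [[1, -dt], [0, 1]], Q = diag(q_angle, q_bias) * dt.
        p00, p01, p10, p11 = (
            p00 - dt * (p01 + p10) + dt * dt * p11 + q_angle * dt,
            p01 - dt * p11,
            p10 - dt * p11,
            p11 + q_bias * dt,
        )
        # Update with the accelerometer angle; measurement matrix H = [1, 0].
        s = p00 + r_measure          # innovation variance
        k0, k1 = p00 / s, p10 / s    # Kalman gain
        innovation = z - angle
        angle += k0 * innovation
        bias += k1 * innovation      # bias corrected via the angle/bias cross-covariance
        # P = (I - K H) P
        p00, p01, p10, p11 = (
            p00 - k0 * p00,
            p01 - k0 * p01,
            p10 - k1 * p00,
            p11 - k1 * p01,
        )
    return angle, bias


# Simulated 20 s of a stationary sensor tilted 0.3 rad whose gyro
# reports only a constant 0.05 rad/s bias.
dt = 0.01
n = 2000
angle, bias = kalman_tilt([0.05] * n, [0.3] * n, dt)
print(f"angle = {angle:.4f} rad, bias = {bias:.4f} rad/s")
```

The bias is never measured directly; it is inferred because an uncorrected bias makes the predicted angle drift away from the accelerometer reading.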

Robust Estimation of Direction Cosine Matrix (2009)

The Premerlani-Bizard Robust Estimator of Direction Cosine Matrix algorithm was used by the Kauaibots in their 2012 “Thunder Chicken” FRC robot to provide reliable tip/tilt measurements to the robot’s automatic bridge-balancing feature. This algorithm is based on the work of Mahony et al., who showed that gyroscope bias drift can also be compensated for by simpler orientation filters through integral feedback of the error in the rate of change of orientation. This algorithm appears well suited to integrating 3-axis magnetometer data with quaternion data from the Nav6 Open Source IMU and other Kalman filter-based algorithms.

Kauai Labs is continuing to refine this software’s performance and accuracy on an indoor robotic drive system, and is also investigating extending this algorithm to Optical Flow Sensor data. The results will be compared against the Extended Kalman Filter by measuring the latency, accuracy, CPU load and robustness of each approach.
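The integral-feedback principle behind this algorithm can be illustrated on a single axis. This is a simplified sketch, not the DCM code itself (which applies proportional-integral correction to a full 3×3 rotation matrix): the accelerometer angle serves as a drift-free reference, and the integral term converges to the negative of a constant gyro bias, cancelling it. The function name and the gains `kp` and `ki` are hypothetical.

```python
def pi_drift_correction(gyro_rates, accel_angles, dt, kp=2.0, ki=0.5):
    """Single-axis complementary filter with proportional-integral feedback.

    The proportional term pulls the integrated gyro angle toward the
    accelerometer reference; the integral term accumulates the remaining
    error and settles at the negative of a constant gyro bias.
    Returns the final (angle, integral_term) pair.
    """
    angle = 0.0
    integral = 0.0
    for rate, ref in zip(gyro_rates, accel_angles):
        error = ref - angle
        integral += ki * error * dt
        corrected_rate = rate + kp * error + integral
        angle += corrected_rate * dt
    return angle, integral


dt = 0.01
n = 6000  # 60 s of samples
# Stationary sensor tilted 0.2 rad; the gyro reads only its constant 0.05 rad/s bias.
angle, integral = pi_drift_correction([0.05] * n, [0.2] * n, dt)
print(f"angle = {angle:.4f} rad, integral term = {integral:.4f} rad/s")
```

Compared with the Kalman sketch, this approach has no covariance bookkeeping: two fixed gains replace the noise model, which is one reason it is cheaper to run.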

Particle Filter

The Particle Filter is being studied for use in the K-OptiNav as a complement to the EKF for estimating Optical Flow displacement data. This filtering technique is used prominently in Simultaneous Localization and Mapping research, and excels at maintaining a running estimate of multi-variable “hidden” values, such as the angular rotation to be derived from the noisy displacement data of a pair of Optical Flow sensors separated by a known distance.
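As a rough illustration of this use case, the following Python sketch runs a basic bootstrap particle filter (random-walk prediction, Gaussian likelihood weighting, multinomial resampling) to estimate a robot's turn rate from the forward displacements reported by two optical flow sensors a known baseline apart. The sensor layout, noise levels and function name are assumptions for illustration only; this is not the K-OptiNav design.

```python
import math
import random


def estimate_turn_rate(left_fwd, right_fwd, baseline, dt, n_particles=500,
                       process_std=0.01, meas_std=0.002, seed=0):
    """Particle filter estimate of angular rate (rad/s) from two flow sensors.

    left_fwd, right_fwd: per-sample forward displacements (m) from sensors
                         mounted `baseline` metres apart on the lateral axis
    meas_std:            assumed std. dev. (rad) of each rotation increment
    process_std:         assumed random-walk std. dev. (rad/s) per sample
    Returns one posterior-mean rate estimate per sample.
    """
    rng = random.Random(seed)
    # Initial belief: rate uniformly distributed over +/- 2 rad/s (an assumption).
    particles = [rng.uniform(-2.0, 2.0) for _ in range(n_particles)]
    estimates = []
    for dl, dr in zip(left_fwd, right_fwd):
        # Differential model: rotation increment = (right - left) / baseline,
        # positive for counter-clockwise turns (left sensor on the +y side).
        z = (dr - dl) / baseline
        # Predict: each rate hypothesis drifts as a random walk.
        particles = [p + rng.gauss(0.0, process_std) for p in particles]
        # Weight: Gaussian likelihood of the measured increment per hypothesis.
        weights = [math.exp(-0.5 * ((z - p * dt) / meas_std) ** 2) for p in particles]
        total = sum(weights)
        if total == 0.0:
            weights = [1.0 / n_particles] * n_particles
        else:
            weights = [w / total for w in weights]
        estimates.append(sum(w * p for w, p in zip(weights, particles)))
        # Resample (multinomial) so particles concentrate on likely rates.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


# Simulated data: 1 m/s forward speed, constant 0.5 rad/s turn, 0.3 m baseline.
sim = random.Random(1)
dt, b, v, omega = 0.02, 0.3, 1.0, 0.5
n = 300
left = [(v - omega * b / 2) * dt + sim.gauss(0.0, 0.0004) for _ in range(n)]
right = [(v + omega * b / 2) * dt + sim.gauss(0.0, 0.0004) for _ in range(n)]
rates = estimate_turn_rate(left, right, b, dt)
print(f"mean of last 50 estimates: {sum(rates[-50:]) / 50:.3f} rad/s")
```

For this single linear-Gaussian state a Kalman filter would do as well; the particle approach earns its cost when the state is multi-variable or the noise is non-Gaussian, as in the SLAM setting mentioned above.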

Efficient Orientation Filter (2010)

This technique (published April 2010):

- is claimed to be computationally inexpensive,

- features an analytically derived and optimised gradient-descent algorithm that performs well at sampling rates as low as 10 Hz,

- is applicable both to tri-axial gyroscope/accelerometer arrays and to arrays that also include tri-axial magnetometers (in which case additional magnetic distortion and gyroscope bias drift compensation is performed), and

- has only 1 or 2 (if tri-axis magnetometers are used) configurable parameters, which can be set from observable system characteristics.
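For reference, the gyroscope/accelerometer form of this gradient-descent filter can be sketched in a few dozen lines of Python. The version below follows the structure of Madgwick's 2010 report (gyro-propagated quaternion rate minus a normalized gradient step scaled by the single gain `beta`), but computes the gradient directly from the objective function and its Jacobian rather than using the report's optimised expressions. The function name and default `beta` are assumptions, and the magnetometer variant is omitted.

```python
import math


def madgwick_imu_update(q, gyro, accel, dt, beta=0.1):
    """One gyro/accelerometer update of a gradient-descent orientation filter.

    q:     orientation quaternion (w, x, y, z)
    gyro:  angular rates (rad/s) about x, y, z
    accel: accelerometer reading (only its direction is used)
    beta:  the single tuning gain of the IMU case
    Returns the new, normalised quaternion.
    """
    q0, q1, q2, q3 = q
    gx, gy, gz = gyro
    # Orientation rate from the gyroscope: 0.5 * q (x) (0, gx, gy, gz).
    qd0 = 0.5 * (-q1 * gx - q2 * gy - q3 * gz)
    qd1 = 0.5 * (q0 * gx + q2 * gz - q3 * gy)
    qd2 = 0.5 * (q0 * gy - q1 * gz + q3 * gx)
    qd3 = 0.5 * (q0 * gz + q1 * gy - q2 * gx)

    norm_a = math.sqrt(sum(a * a for a in accel))
    if norm_a > 0.0:
        ax, ay, az = (a / norm_a for a in accel)
        # Objective f: gravity direction predicted from q minus measured direction.
        f1 = 2.0 * (q1 * q3 - q0 * q2) - ax
        f2 = 2.0 * (q0 * q1 + q2 * q3) - ay
        f3 = 2.0 * (0.5 - q1 * q1 - q2 * q2) - az
        # Gradient J^T f, with J the Jacobian of f with respect to q.
        s0 = -2.0 * q2 * f1 + 2.0 * q1 * f2
        s1 = 2.0 * q3 * f1 + 2.0 * q0 * f2 - 4.0 * q1 * f3
        s2 = -2.0 * q0 * f1 + 2.0 * q3 * f2 - 4.0 * q2 * f3
        s3 = 2.0 * q1 * f1 + 2.0 * q2 * f2
        norm_s = math.sqrt(s0 * s0 + s1 * s1 + s2 * s2 + s3 * s3)
        if norm_s > 0.0:
            # Step along the normalised negative gradient, scaled by beta.
            qd0 -= beta * s0 / norm_s
            qd1 -= beta * s1 / norm_s
            qd2 -= beta * s2 / norm_s
            qd3 -= beta * s3 / norm_s

    # Integrate the combined rate and renormalise.
    q0 += qd0 * dt
    q1 += qd1 * dt
    q2 += qd2 * dt
    q3 += qd3 * dt
    n = math.sqrt(q0 * q0 + q1 * q1 + q2 * q2 + q3 * q3)
    return (q0 / n, q1 / n, q2 / n, q3 / n)


# Stationary sensor, zero gyro rates, gravity measured along (0, 0.6, 0.8).
q = (1.0, 0.0, 0.0, 0.0)
for _ in range(2000):
    q = madgwick_imu_update(q, (0.0, 0.0, 0.0), (0.0, 0.6, 0.8), dt=0.01)
print(q)
```

Because the gradient step is normalized, `beta` sets how fast accelerometer corrections are applied; larger values track tilt faster but pass through more accelerometer noise.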

This orientation filter outputs orientation in quaternion format, which is known to be more computationally efficient than Euler angle representation and also avoids its inherent singularities.
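A quaternion can still be converted to Euler angles for display. This minimal sketch assumes a unit quaternion ordered (w, x, y, z) and the common Z-Y-X (yaw, pitch, roll) convention; near ±90° pitch the conversion itself degenerates, which is the singularity the quaternion form avoids.

```python
import math


def quaternion_to_euler(q):
    """Convert a unit quaternion (w, x, y, z) to (roll, pitch, yaw) in radians.

    Near pitch = +/-90 degrees roll and yaw become coupled (gimbal lock) and
    only their combination is determined; the quaternion has no such problem.
    """
    w, x, y, z = q
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp so rounding error cannot push asin outside its [-1, 1] domain.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


# A 90-degree rotation about the vertical (z) axis.
h = math.sqrt(0.5)
print([math.degrees(a) for a in quaternion_to_euler((h, 0.0, 0.0, h))])
```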

For more information, please see the discussion of the relative merits of DCM and the “Efficient Orientation Filter” on the DIYDrones forum.

Imaging Sensors

Kauai Labs is currently working with several imaging cameras in an ongoing effort to identify visible and infrared imaging cameras that offer the best performance at a low cost.

Kauai Labs is also working with the ZED stereo camera to calculate the range to objects in the near-to-mid field via object recognition and triangulation.
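Range from a stereo pair follows from similar triangles: for rectified images, depth Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras and d the disparity in pixels. The sketch below uses illustrative numbers that are not ZED specifications, and also shows why stereo works best in the near-to-mid field: a one-pixel disparity error produces a depth error that grows with the square of the distance.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_resolution(focal_length_px, baseline_m, depth_m, disparity_step_px=1.0):
    """Approximate depth change for a small disparity error: dZ ~ Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_step_px / (focal_length_px * baseline_m)


# Illustrative values: 700 px focal length, 12 cm baseline, 42 px disparity.
z = stereo_depth(700.0, 0.12, 42.0)
print(f"depth = {z:.2f} m, about {depth_resolution(700.0, 0.12, z):.3f} m per pixel of disparity")
```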

Optical Depth/Ranging Sensors

Kauai Labs has worked for several years with the SHARP IR Range Finder. This simple, inexpensive infrared range finder can be used alongside an Optical Flow sensor to gauge the distance to the surface on which the X/Y displacements are being measured, a key metric because it directly affects the accuracy of the Optical Flow-based displacement measurement.
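Why the surface distance matters can be shown with a simple pinhole model: for a downward-looking sensor translating parallel to a flat surface, ground displacement equals image flow times height divided by focal length, so a 10% error in height yields a 10% error in displacement. The sketch below assumes flow in pixels and a focal length in pixel units from a hypothetical calibration; it ignores rotation-induced flow and lens distortion.

```python
def flow_to_ground_displacement(flow_px, focal_length_px, height_m):
    """Convert optical flow image motion (pixels) to surface displacement (m).

    Pinhole model: displacement = flow * height / focal_length, valid for a
    sensor looking straight down at a flat surface and translating parallel to it.
    """
    return flow_px * height_m / focal_length_px


# 20 px of flow with a 400 px focal length, at 10 cm and at 11 cm above the floor.
print(flow_to_ground_displacement(20.0, 400.0, 0.10))
print(flow_to_ground_displacement(20.0, 400.0, 0.11))
```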

Kauai Labs has also worked with the Vishay VCNL4010 Fully Integrated Proximity and Ambient Light Sensor, a single IC of approximately 4 mm × 4 mm that contains an integrated LED and PIN photodiode for measuring the distance to a surface at ranges of up to 20 cm. Those interested in working with this IC may find the Sparkfun.

Lidar Sensors

In 2014, a startup named “Pulsed Light” developed a new low-cost sensor, the “LIDAR-Lite”. The sensor is now sold by Garmin and offers high-performance optical distance sensing at a fraction of the cost of comparable sensors.

The LIDAR-Lite has a range of up to 40 meters (farther than either the SHARP IR sensor or the Kinect sensor), and features a low-cost implementation of the time-of-flight techniques used in expensive LIDAR systems such as those on the Google Car. The LIDAR-Lite uses an I2C communications interface, which allows multiple modules to be connected as slaves on a common bus, and draws only 100 mA of current.
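The time-of-flight principle reduces to halving the round-trip travel time of a light pulse: d = c·t/2. This generic sketch does not model the LIDAR-Lite's internal signal processing; it only shows the timing scale involved: a 40 m target returns in roughly 267 ns, and each nanosecond of timing error corresponds to about 15 cm of range.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance(round_trip_s):
    """Distance (m) to a target from a light pulse's round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_time(distance_m):
    """Round-trip time (s) for a light pulse to reach a target and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


print(f"40 m round trip: {round_trip_time(40.0) * 1e9:.1f} ns")
print(f"1 ns of timing error: {tof_distance(1e-9) * 100:.1f} cm")
```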

In 2017, Kauai Labs began working with the Scanse Sweep, a scanning LIDAR based on the LIDAR-Lite sensor that can perform multiple 360-degree scans per second.
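Each revolution of a scanning LIDAR yields (angle, distance) pairs, which are usually converted to Cartesian points before mapping or obstacle detection. This minimal sketch assumes angles in degrees measured counter-clockwise from the sensor's +x axis and distances in meters; the Sweep's actual output units and angle conventions should be checked against its documentation.

```python
import math


def scan_to_points(scan):
    """Convert (angle_deg, distance_m) samples from a 360-degree scan to (x, y) points.

    Assumes angles increase counter-clockwise from the sensor's +x axis.
    """
    points = []
    for angle_deg, distance_m in scan:
        theta = math.radians(angle_deg)
        points.append((distance_m * math.cos(theta), distance_m * math.sin(theta)))
    return points


# Hypothetical readings: 1 m straight ahead, 2 m to the left, 1.5 m behind.
print(scan_to_points([(0.0, 1.0), (90.0, 2.0), (180.0, 1.5)]))
```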

Looking ahead, Kauai Labs is investigating the new solid-state LIDAR sensors recently announced for low-cost autonomous vehicle systems; as these sensors become mainstream and drop in price, they are expected to be useful in low-cost robotics systems.

Indoor Localization Sensors

Kauai Labs is currently studying a new product from Broadcom: the BCM4752 GNSS Receiver. This product was just announced and has new features that could improve the quality of indoor localization. Details are still sparse, but the product marketing information available so far indicates that this integrated GNSS receiver, Wi-Fi/Bluetooth receiver and processor is also designed to accept input from inertial navigation sensors like those found in K-Nav, providing faster “time to lock” than current GPS systems and promising usability indoors. If the “Indoor Absolute Position” promise pans out, Kauai Labs expects to begin research in earnest on prototyping the integration of the BCM4752 into the Nav and potentially even the OptiNav product lines.

Vehicle-to-Vehicle Communications (V2V, V2I, V2X)

Kauai Labs is researching developments in DSRC (Dedicated Short Range Communications) technology and the 802.11p standard for use in robot-to-robot communications. In February 2014, the National Highway Traffic Safety Administration (NHTSA) announced it would be developing a regulatory proposal for mandatory inclusion of vehicle-to-vehicle communications technology in light commercial vehicles. Regulatory action in this area appears to be increasing, and it seems only a matter of time before this technology is pervasive in our vehicles and on our roads.

V2V is well suited to robot-to-robot communication, enabling task coordination and cooperation in addition to improving vehicle localization.

RELATED RESEARCH PAPERS

Experimental results of a Differential Optic-Flow System.

This paper presents experimental results on the performance of a differential optic flow system (using two optical flow sensors) in both indoor and outdoor environments. The experiments found that the system performed better outdoors than indoors, owing to the rich textures and good lighting conditions found outdoors.

Dual Optical-Flow Integrated Inertial Navigation

This paper discusses a real-time visual odometry system based on dual optical-flow systems and its integration into aided inertial navigation for small-scale flying robots. To overcome the unknown depth information in optic flow, a dual optic-flow system is developed. The flow measurements are then fused with a low-cost inertial sensor using an extended Kalman filter. Experimental results in an indoor environment are presented, showing improved navigation performance with constrained errors in height, velocity and attitude.

Mobile Robot Localization Using Optical Flow Sensors

Two position estimation methods are developed in this paper, one using a single optical flow sensor and a second using two optical flow sensors. The single-sensor method can accurately estimate position under ideal conditions and also when wheel slip perpendicular to the axis of the wheel occurs. The dual-sensor method can accurately estimate position even when wheel slip parallel to the axis of the wheel occurs. The placement of the sensors is investigated in order to minimize errors caused by inaccurate sensor readings. Finally, a method is implemented and tested using a potential-field-based navigation scheme. Position estimates were found to be as accurate as dead-reckoning in ideal conditions and much more accurate in cases where wheel slip occurs.
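The benefit of a second sensor can be seen from rigid-body kinematics: two sensors at known mounting points observe rotation as a difference in their forward readings, and lateral slip appears directly in their sideways readings. The Python sketch below is a generic dead-reckoning integrator under assumed conditions (sensors aligned with the robot axes, mounted symmetrically on the lateral axis, small motion per sample, flat surface); it is not the method from the paper above, and the function name is hypothetical.

```python
import math


def dual_flow_odometry(samples, baseline_m, pose=(0.0, 0.0, 0.0)):
    """Integrate robot pose (x, y, heading) from two optical flow sensors.

    samples: sequence of ((dx_l, dy_l), (dx_r, dy_r)) displacements in metres,
             in the robot frame (x forward, y left), from a left sensor at
             (0, +baseline/2) and a right sensor at (0, -baseline/2).
    """
    x, y, heading = pose
    for (dx_l, dy_l), (dx_r, dy_r) in samples:
        # Rotation makes the two forward readings differ.
        d_theta = (dx_r - dx_l) / baseline_m
        # Translation of the midpoint between the sensors (lateral slip included).
        tx = 0.5 * (dx_l + dx_r)
        ty = 0.5 * (dy_l + dy_r)
        # Rotate into the world frame using the mid-step heading.
        mid = heading + 0.5 * d_theta
        x += tx * math.cos(mid) - ty * math.sin(mid)
        y += tx * math.sin(mid) + ty * math.cos(mid)
        heading += d_theta
    return x, y, heading


# Hypothetical 0.2 m baseline: 1 m of straight motion, then a pure rotation.
straight = [((0.01, 0.0), (0.01, 0.0))] * 100
spin = [((-0.01, 0.0), (0.01, 0.0))] * 10
pose = dual_flow_odometry(straight, 0.2)
pose = dual_flow_odometry(spin, 0.2, pose)
print(pose)
```

With a single sensor, the same forward readings could not distinguish a turn from straight motion, which is the limitation the dual-sensor configuration addresses.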

Characterisation of Low-cost Optical Flow Sensors

This paper presents the development and characterization of a low-cost optical sensor module for localization, using CMOS sensors found in optical mice with modified optics. The paper proposes a mathematical model to transform the sensor data into a form suitable for sensor fusion. Testing presented in the paper shows that the transformation successfully removes error bias, and that the model also allows the variance of residual errors to be estimated. This allows data with lower image quality to be used for estimation, extending the useful range of distances of the optical sensor module and allowing more accurate estimation of velocity.

Using Arduino for Tangible Human Computer Interaction (Fabio Varesano, April 2011).

This Master’s thesis covers use of the Arduino platform and development environment, fundamentals of electronic circuit theory, the PCB design and fabrication process, the fundamentals of orientation sensing, and the FreeIMU and Femtoduino projects.

PROTOTYPES

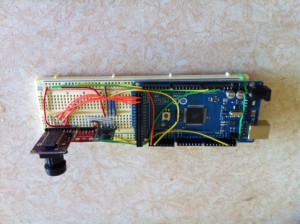

Here’s the original KOptiNav prototype board, comprising an Arduino Mega2560 with the DIYDrones Optical Flow v1.0 sensor board, the Pololu Mini-IMU9 AHRS board and the Sparkfun VCNL4000 Breakout board. This prototype development effort included developing the software for acquiring and fusing the data, and acquiring data with which to optimize the optical design for use at a range of distances and on a range of surface types.

A newer (version 5) prototype is under development using the PixArt PAA5101 sensor; we’ll post more details when they’re available.

RELATED PATENTS

US 2010/0118123 A1, “Depth Mapping using Projected Patterns”, PrimeSense. This patent describes the key technology used within the Microsoft Kinect to derive a depth map “point cloud”.
